CheapASPNETHostingReview.com | Best and cheap ASP.NET 4.5 hosting. This tutorial will teach you the basics of building an asynchronous ASP.NET Web Forms application using Visual Studio Express 2012 for Web, which is a free version of Microsoft Visual Studio. You can also use Visual Studio 2012.

ASP.NET 4.5 Web Forms, in combination with .NET 4.5, enables you to register asynchronous methods that return an object of type Task. The .NET Framework 4 introduced an asynchronous programming concept referred to as a Task, and ASP.NET 4.5 supports Task. Tasks are represented by the Task type and related types in the System.Threading.Tasks namespace. The .NET Framework 4.5 builds on this asynchronous support with the await and async keywords, which make working with Task objects much less complex than previous asynchronous approaches. The await keyword is syntactical shorthand for indicating that a piece of code should asynchronously wait on some other piece of code. The async keyword represents a hint that you can use to mark methods as task-based asynchronous methods. The combination of await, async, and the Task object makes it much easier for you to write asynchronous code in .NET 4.5. The new model for asynchronous methods is called the Task-based Asynchronous Pattern (TAP). This tutorial assumes you have some familiarity with asynchronous programming using the await and async keywords and the System.Threading.Tasks namespace.

How Requests Are Processed by the Thread Pool

On the web server, the .NET Framework maintains a pool of threads that are used to service ASP.NET requests. When a request arrives, a thread from the pool is dispatched to process that request. If the request is processed synchronously, the thread that processes the request is busy while the request is being processed, and that thread cannot service another request.

This might not be a problem, because the thread pool can be made large enough to accommodate many busy threads. However, the number of threads in the thread pool is limited (the default maximum for .NET 4.5 is 5,000). In large applications with high concurrency of long-running requests, all available threads might be busy. This condition is known as thread starvation. When this condition is reached, the web server queues requests. If the request queue becomes full, the web server rejects requests with an HTTP 503 status (Server Too Busy).

The CLR thread pool has limitations on new thread injections. If concurrency is bursty (that is, your web site can suddenly get a large number of requests) and all available request threads are busy because of backend calls with high latency, the limited thread injection rate can make your application respond very poorly. Additionally, each new thread added to the thread pool has overhead (such as 1 MB of stack memory). A web application using synchronous methods to service high latency calls where the thread pool grows to the .NET 4.5 default maximum of 5,000 threads would consume approximately 5 GB more memory than an application able to service the same requests using asynchronous methods and only 50 threads.

When you’re doing asynchronous work, you’re not always using a thread. For example, when you make an asynchronous web service request, ASP.NET will not be using any threads between the async method call and the await. Using the thread pool to service requests with high latency can lead to a large memory footprint and poor utilization of the server hardware.
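The memory comparison above is simple arithmetic. The following is a minimal sketch of that calculation, assuming roughly 1 MB of stack per thread as quoted in the text. The class and method names are hypothetical and are not part of the sample application.

```csharp
using System;

// Hypothetical helper: estimates the stack memory reserved by a number of
// threads, assuming about 1 MB of stack per thread (the figure quoted above).
public static class ThreadMemoryEstimate
{
    private const double StackMegabytesPerThread = 1.0;

    // Total stack memory, in gigabytes, for the given number of threads.
    public static double StackGigabytes(int threadCount)
    {
        return threadCount * StackMegabytesPerThread / 1024.0;
    }

    // Extra memory used by a fully grown synchronous pool compared with
    // a small pool that services the same load asynchronously.
    public static double ExtraGigabytes(int syncThreads, int asyncThreads)
    {
        return StackGigabytes(syncThreads) - StackGigabytes(asyncThreads);
    }
}
```

Calling `ThreadMemoryEstimate.ExtraGigabytes(5000, 50)` gives a value of about 4.8, which matches the "approximately 5 GB" figure. Real memory use depends on how much of each stack is actually committed, so treat this as an upper-bound estimate.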

Processing Asynchronous Requests

In web applications that see a large number of concurrent requests at start-up or have a bursty load (where concurrency increases suddenly), making web service calls asynchronous will increase the responsiveness of your application. An asynchronous request takes the same amount of time to process as a synchronous request. For example, if a request makes a web service call that requires two seconds to complete, the request takes two seconds whether it is performed synchronously or asynchronously. However, during an asynchronous call, a thread is not blocked from responding to other requests while it waits for the first request to complete. Therefore, asynchronous requests prevent request queuing and thread pool growth when there are many concurrent requests that invoke long-running operations.
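The point that waiting does not hold a thread can be illustrated outside ASP.NET. The sketch below is not taken from the sample application: it uses Task.Delay as a stand-in for a slow web service call, and the names NonBlockingDemo, HandleRequestAsync, and RunAsync are hypothetical.

```csharp
using System;
using System.Diagnostics;
using System.Linq;
using System.Threading.Tasks;

// Sketch: Task.Delay stands in for a high-latency backend call (an assumption
// made for illustration only).
public static class NonBlockingDemo
{
    // Simulates one request that waits on a slow backend.
    public static async Task<int> HandleRequestAsync(int id, int latencyMs)
    {
        await Task.Delay(latencyMs); // no thread is blocked during the delay
        return id;
    }

    // Starts many simulated requests at once and returns the total elapsed time.
    public static async Task<TimeSpan> RunAsync(int requestCount, int latencyMs)
    {
        var stopwatch = Stopwatch.StartNew();
        Task<int>[] requests = Enumerable.Range(0, requestCount)
            .Select(i => HandleRequestAsync(i, latencyMs))
            .ToArray();
        await Task.WhenAll(requests);
        stopwatch.Stop();
        return stopwatch.Elapsed;
    }
}
```

Each simulated request still takes the full latency to finish, as the paragraph above says. Because no thread is held during the wait, running a few hundred of them together should take roughly one latency period in total rather than the sum of all of them.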

Choosing Synchronous or Asynchronous Methods

This section lists guidelines for when to use synchronous or asynchronous methods. These are just guidelines; examine each application individually to determine whether asynchronous methods help with performance.

In general, use synchronous methods for the following conditions:

- The operations are simple or short-running.

- Simplicity is more important than efficiency.

- The operations are primarily CPU operations instead of operations that involve extensive disk or network overhead. Using asynchronous methods on CPU-bound operations provides no benefits and results in more overhead.

In general, use asynchronous methods for the following conditions:

- You’re calling services that can be consumed through asynchronous methods, and you’re using .NET 4.5 or higher.

- The operations are network-bound or I/O-bound instead of CPU-bound.

- Parallelism is more important than simplicity of code.

- You want to provide a mechanism that lets users cancel a long-running request.

- The benefit of switching threads outweighs the cost of the context switch. In general, you should make a method asynchronous if the synchronous method blocks the ASP.NET request thread while doing no work. By making the call asynchronous, the ASP.NET request thread is not blocked doing no work while it waits for the web service request to complete.

- Testing shows that the blocking operations are a bottleneck in site performance and that IIS can service more requests by using asynchronous methods for these blocking calls.

The downloadable sample shows how to use asynchronous methods effectively. The sample was designed to provide a simple demonstration of asynchronous programming in ASP.NET 4.5. It is not intended to be a reference architecture for asynchronous programming in ASP.NET. The sample program calls ASP.NET Web API methods, which in turn call Task.Delay to simulate long-running web service calls. Most production applications will not show such obvious benefits from using asynchronous methods.

Few applications require all methods to be asynchronous. Often, converting a few synchronous methods to asynchronous methods provides the best efficiency increase for the amount of work required.

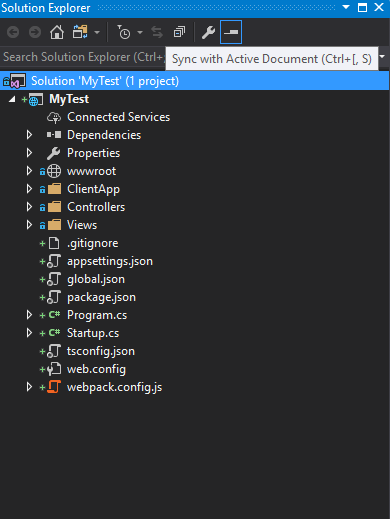

The Sample Application

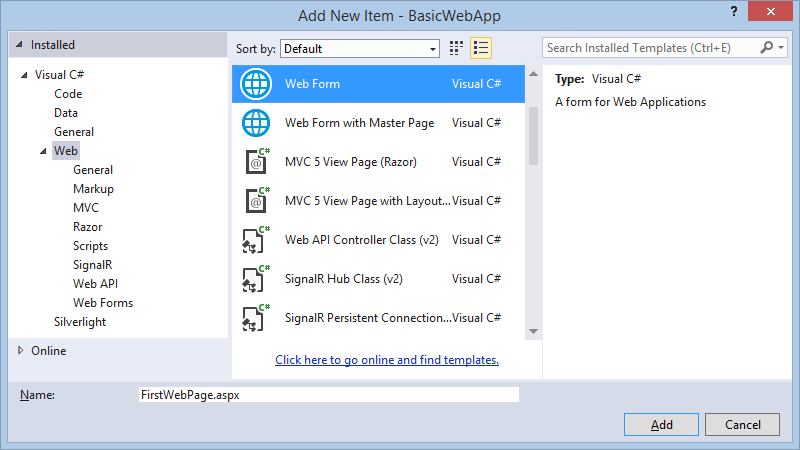

You can download the sample application from https://github.com/RickAndMSFT/Async-ASP.NET on the GitHub site. The repository consists of three projects:

- WebAppAsync: The ASP.NET Web Forms project that consumes the Web API WebAPIpwg service. Most of the code for this tutorial is from this project.

- WebAPIpgw: The ASP.NET MVC 4 Web API project that implements the Products, Gizmos and Widgets controllers. It provides the data for the WebAppAsync project and the Mvc4Async project.

- Mvc4Async: The ASP.NET MVC 4 project that contains the code used in another tutorial. It makes Web API calls to the WebAPIpwg service.

The Gizmos Synchronous Page

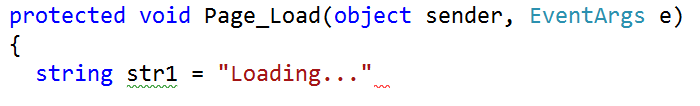

The following code shows the Page_Load synchronous method that is used to display a list of gizmos. (For this article, a gizmo is a fictional mechanical device.)

```csharp
public partial class Gizmos : System.Web.UI.Page
{
    protected void Page_Load(object sender, EventArgs e)
    {
        var gizmoService = new GizmoService();
        GizmoGridView.DataSource = gizmoService.GetGizmos();
        GizmoGridView.DataBind();
    }
}
```

The following code shows the GetGizmos method of the gizmo service.

```csharp
public class GizmoService
{
    // The asynchronous GetGizmosAsync method is omitted here;
    // it is shown later in this tutorial.

    public List<Gizmo> GetGizmos()
    {
        var uri = Util.getServiceUri("Gizmos");
        using (WebClient webClient = new WebClient())
        {
            // Blocks the calling thread until the download completes.
            return JsonConvert.DeserializeObject<List<Gizmo>>(
                webClient.DownloadString(uri)
            );
        }
    }
}
```

The GizmoService GetGizmos method passes a URI to an ASP.NET Web API HTTP service, which returns a list of gizmo data. The WebAPIpgw project contains the implementation of the Web API gizmos, widget and product controllers.

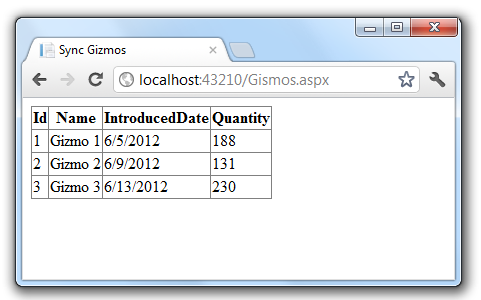

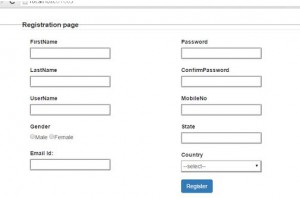

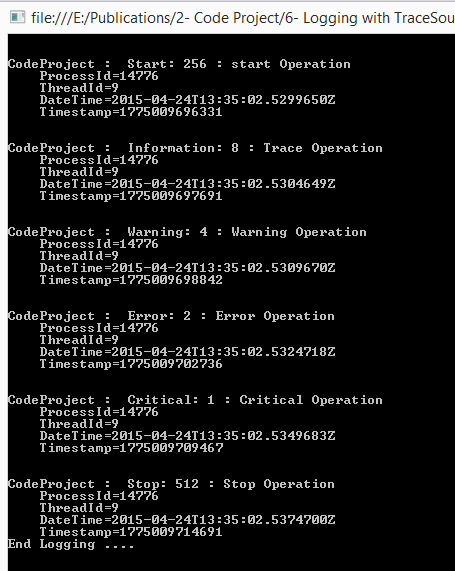

The following image shows the gizmos page from the sample project.

Creating an Asynchronous Gizmos Page

The sample uses the new async and await keywords (available in .NET 4.5 and Visual Studio 2012) to let the compiler be responsible for maintaining the complicated transformations necessary for asynchronous programming. The compiler lets you write code using C#’s synchronous control flow constructs, and it automatically applies the transformations necessary to use callbacks in order to avoid blocking threads.

ASP.NET asynchronous pages must include the Page directive with the Async attribute set to “true”. The following code shows the Page directive with the Async attribute set to “true” for the GizmosAsync.aspx page.

```
<%@ Page Async="true" Language="C#" AutoEventWireup="true"
    CodeBehind="GizmosAsync.aspx.cs" Inherits="WebAppAsync.GizmosAsync" %>
```

The following code shows the Gizmos synchronous Page_Load method and the GizmosAsync asynchronous page.

```csharp
protected void Page_Load(object sender, EventArgs e)
{
    var gizmoService = new GizmoService();
    GizmoGridView.DataSource = gizmoService.GetGizmos();
    GizmoGridView.DataBind();
}
```

The asynchronous version:

```csharp
protected void Page_Load(object sender, EventArgs e)
{
    RegisterAsyncTask(new PageAsyncTask(GetGizmosSvcAsync));
}

private async Task GetGizmosSvcAsync()
{
    var gizmoService = new GizmoService();
    GizmosGridView.DataSource = await gizmoService.GetGizmosAsync();
    GizmosGridView.DataBind();
}
```

The following changes were applied to allow the GizmosAsync page to be asynchronous.

- The Page directive must have the Async attribute set to “true”.

- The RegisterAsyncTask method is used to register an asynchronous task containing the code which runs asynchronously.

- The new GetGizmosSvcAsync method is marked with the async keyword, which tells the compiler to generate callbacks for parts of the body and to automatically create a Task that is returned.

- “Async” was appended to the asynchronous method name. Appending “Async” is not required but is the convention when writing asynchronous methods.

- The return type of the new GetGizmosSvcAsync method is Task. The return type of Task represents ongoing work and provides callers of the method with a handle through which to wait for the asynchronous operation’s completion.

- The await keyword was applied to the web service call.

- The asynchronous web service API was called (GetGizmosAsync).

Inside the GetGizmosSvcAsync method body, another asynchronous method, GetGizmosAsync, is called. GetGizmosAsync immediately returns a Task<List<Gizmo>> that will eventually complete when the data is available. Because you don’t want to do anything else until you have the gizmo data, the code awaits the task (using the await keyword). You can use the await keyword only in methods annotated with the async keyword.

The await keyword does not block the thread until the task is complete. It signs up the rest of the method as a callback on the task, and immediately returns. When the awaited task eventually completes, it will invoke that callback and thus resume the execution of the method right where it left off. For more information on using the await and async keywords and the Task namespace, see the async references section.
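To make the "rest of the method as a callback" idea concrete, here is a rough sketch that writes the same logic twice: once with await and once with an explicit ContinueWith callback. It is only an approximation. The compiler actually generates a state machine, and await also handles exceptions and the ASP.NET synchronization context differently from a bare ContinueWith. The AwaitAsCallback class and FetchCountAsync method are hypothetical stand-ins, not code from the sample.

```csharp
using System;
using System.Threading.Tasks;

public static class AwaitAsCallback
{
    // Stand-in for a slow call; Task.Delay is used purely for illustration.
    private static async Task<int> FetchCountAsync()
    {
        await Task.Delay(100);
        return 3;
    }

    // Version written with await.
    public static async Task<string> WithAwaitAsync()
    {
        int count = await FetchCountAsync();   // control returns to the caller here
        return "Loaded " + count + " gizmos";  // runs later, once the task completes
    }

    // Approximately equivalent version: the rest of the method is an explicit callback.
    public static Task<string> WithCallbackAsync()
    {
        return FetchCountAsync()
            .ContinueWith(t => "Loaded " + t.Result + " gizmos");
    }
}
```

Both methods return a task immediately and produce the same string once the simulated call completes. The await version keeps the ordinary top-to-bottom control flow.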

The following code shows the GetGizmos and GetGizmosAsync methods.

```csharp
public List<Gizmo> GetGizmos()
{
    var uri = Util.getServiceUri("Gizmos");
    using (WebClient webClient = new WebClient())
    {
        return JsonConvert.DeserializeObject<List<Gizmo>>(
            webClient.DownloadString(uri)
        );
    }
}
```

```csharp
public async Task<List<Gizmo>> GetGizmosAsync()
{
    var uri = Util.getServiceUri("Gizmos");
    using (HttpClient httpClient = new HttpClient())
    {
        var response = await httpClient.GetAsync(uri);
        return (await response.Content.ReadAsAsync<List<Gizmo>>());
    }
}
```

The asynchronous changes are similar to those made to the GizmosAsync page above.

- The method signature was annotated with the async keyword, the return type was changed to Task<List<Gizmo>>, and Async was appended to the method name.

- The asynchronous HttpClient class is used instead of the synchronous WebClient class.

- The await keyword was applied to the HttpClient GetAsync asynchronous method.

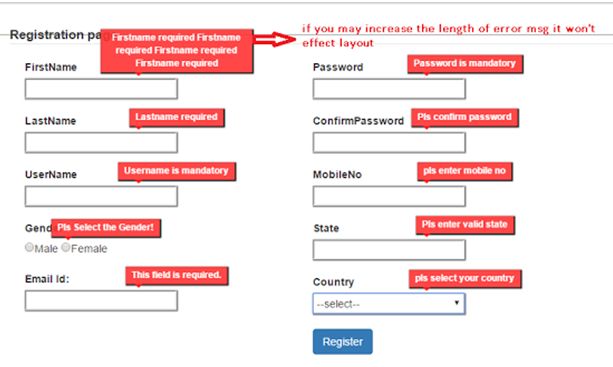

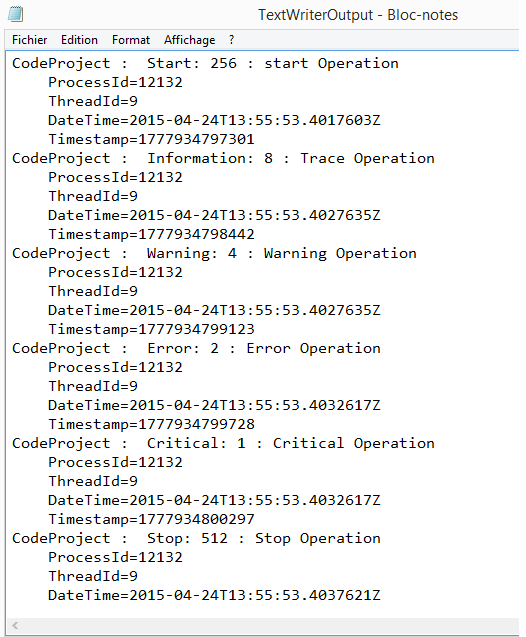

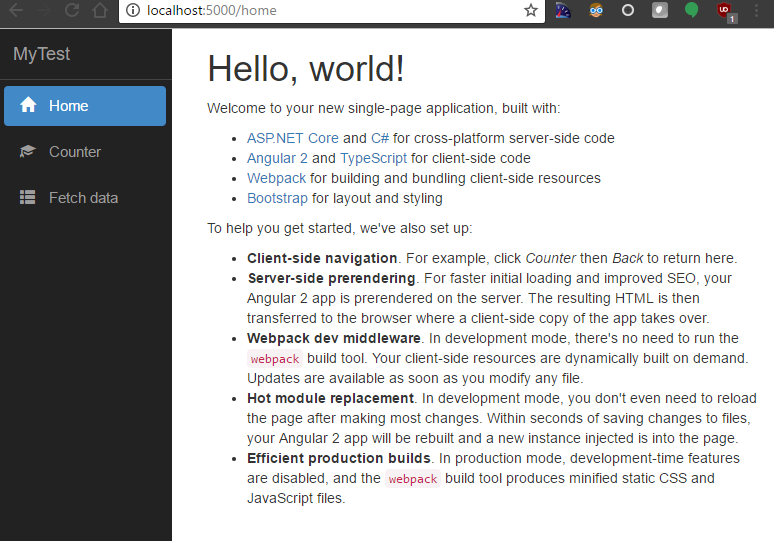

The following image shows the asynchronous gizmo view.

The browser’s presentation of the gizmos data is identical to the view created by the synchronous call. The only difference is that the asynchronous version may be more performant under heavy loads.

RegisterAsyncTask Notes

Methods hooked up with RegisterAsyncTask will run immediately after PreRender. You can also use async void page events directly, as shown in the following code:

```csharp
protected async void Page_Load(object sender, EventArgs e)
{
    await ...;
    // do work
}
```

The downside to async void events is that developers no longer have full control over when events execute. For example, if both an .aspx and a .Master define Page_Load events and one or both of them are asynchronous, the order of execution can’t be guaranteed. The same indeterminate order applies to other event handlers (such as async void Button_Click). For most developers this should be acceptable, but those who require full control over the order of execution should only use APIs like RegisterAsyncTask that consume methods which return a Task object.

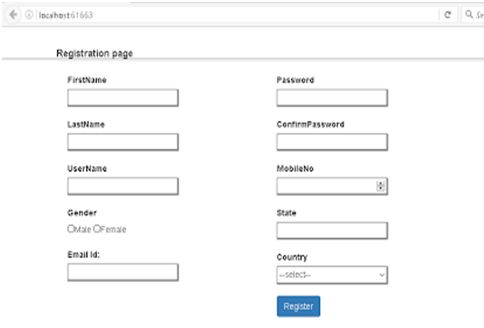

Performing Multiple Operations in Parallel

Asynchronous methods have a significant advantage over synchronous methods when an action must perform several independent operations. In the sample provided, the synchronous page PWG.aspx (for Products, Widgets and Gizmos) displays the results of three web service calls to get a list of products, widgets, and gizmos. The ASP.NET Web API project that provides these services uses Task.Delay to simulate latency or slow network calls. When the delay is set to 500 milliseconds, the asynchronous PWGasync.aspx page takes a little over 500 milliseconds to complete, while the synchronous PWG version takes over 1,500 milliseconds. The synchronous PWG.aspx page is shown in the following code.

```csharp
protected void Page_Load(object sender, EventArgs e)
{
    Stopwatch stopWatch = new Stopwatch();
    stopWatch.Start();

    var widgetService = new WidgetService();
    var prodService = new ProductService();
    var gizmoService = new GizmoService();

    // The three calls run one after another, so their latencies add up.
    var pwgVM = new ProdGizWidgetVM(
        widgetService.GetWidgets(),
        prodService.GetProducts(),
        gizmoService.GetGizmos()
    );

    WidgetGridView.DataSource = pwgVM.widgetList;
    WidgetGridView.DataBind();
    ProductGridView.DataSource = pwgVM.prodList;
    ProductGridView.DataBind();
    GizmoGridView.DataSource = pwgVM.gizmoList;
    GizmoGridView.DataBind();

    stopWatch.Stop();
    // TotalSeconds reports the whole elapsed time. Elapsed.Milliseconds is only
    // the 0-999 millisecond component and would drop whole seconds.
    ElapsedTimeLabel.Text = String.Format("Elapsed time: {0}",
        stopWatch.Elapsed.TotalSeconds);
}
```

The asynchronous PWGasync code behind is shown below.

```csharp
protected void Page_Load(object sender, EventArgs e)
{
    RegisterAsyncTask(new PageAsyncTask(GetPWGsrvAsync));
}

private async Task GetPWGsrvAsync()
{
    // Time the asynchronous work itself. Stopping the stopwatch in Page_Load
    // right after RegisterAsyncTask would measure only the registration call.
    Stopwatch stopWatch = Stopwatch.StartNew();

    var widgetService = new WidgetService();
    var prodService = new ProductService();
    var gizmoService = new GizmoService();

    // Start all three calls before awaiting any of them.
    var widgetTask = widgetService.GetWidgetsAsync();
    var prodTask = prodService.GetProductsAsync();
    var gizmoTask = gizmoService.GetGizmosAsync();

    await Task.WhenAll(widgetTask, prodTask, gizmoTask);

    var pwgVM = new ProdGizWidgetVM(
        widgetTask.Result,
        prodTask.Result,
        gizmoTask.Result
    );

    WidgetGridView.DataSource = pwgVM.widgetList;
    WidgetGridView.DataBind();
    ProductGridView.DataSource = pwgVM.prodList;
    ProductGridView.DataBind();
    GizmoGridView.DataSource = pwgVM.gizmoList;
    GizmoGridView.DataBind();

    stopWatch.Stop();
    ElapsedTimeLabel.Text = String.Format("Elapsed time: {0}",
        stopWatch.Elapsed.TotalSeconds);
}
```
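The timing difference described in this section can be reproduced without the web projects. The following sketch assumes a hypothetical CallServiceAsync helper that uses Task.Delay in place of the real Web API calls. It compares awaiting three calls one at a time with starting all three and awaiting Task.WhenAll.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

public static class ParallelCallsDemo
{
    // Hypothetical stand-in for a web service call with fixed latency.
    private static async Task<string> CallServiceAsync(string name, int latencyMs)
    {
        await Task.Delay(latencyMs);
        return name;
    }

    // Awaits each call before starting the next, so the latencies add up.
    public static async Task<TimeSpan> SequentialAsync(int latencyMs)
    {
        var stopwatch = Stopwatch.StartNew();
        await CallServiceAsync("widgets", latencyMs);
        await CallServiceAsync("products", latencyMs);
        await CallServiceAsync("gizmos", latencyMs);
        return stopwatch.Elapsed;
    }

    // Starts all three calls first, then awaits them together.
    public static async Task<TimeSpan> ConcurrentAsync(int latencyMs)
    {
        var stopwatch = Stopwatch.StartNew();
        Task<string> widgets = CallServiceAsync("widgets", latencyMs);
        Task<string> products = CallServiceAsync("products", latencyMs);
        Task<string> gizmos = CallServiceAsync("gizmos", latencyMs);
        await Task.WhenAll(widgets, products, gizmos);
        return stopwatch.Elapsed;
    }
}
```

With a latency of 500 milliseconds, SequentialAsync should take around three times as long as ConcurrentAsync, which mirrors the figures quoted for PWG.aspx and PWGasync.aspx. The key design point is that the tasks are created before the first await, so the three waits overlap.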

Using a Cancellation Token

Asynchronous methods returning Task are cancelable; that is, they take a CancellationToken parameter when one is provided with the AsyncTimeout attribute of the Page directive. The following code shows the GizmosCancelAsync.aspx page with a timeout of one second.

```
<%@ Page Async="true" AsyncTimeout="1"
    Language="C#" AutoEventWireup="true"
    CodeBehind="GizmosCancelAsync.aspx.cs"
    Inherits="WebAppAsync.GizmosCancelAsync" %>
```

The following code shows the GizmosCancelAsync.aspx.cs file.

```csharp
protected void Page_Load(object sender, EventArgs e)
{
    RegisterAsyncTask(new PageAsyncTask(GetGizmosSvcCancelAsync));
}

private async Task GetGizmosSvcCancelAsync(CancellationToken cancellationToken)
{
    var gizmoService = new GizmoService();
    var gizmoList = await gizmoService.GetGizmosAsync(cancellationToken);
    GizmosGridView.DataSource = gizmoList;
    GizmosGridView.DataBind();
}

private void Page_Error(object sender, EventArgs e)
{
    Exception exc = Server.GetLastError();

    if (exc is TimeoutException)
    {
        // Pass the error on to the Timeout Error page
        Server.Transfer("TimeoutErrorPage.aspx", true);
    }
}
```

In the sample application provided, selecting the GizmosCancelAsync link calls the GizmosCancelAsync.aspx page and demonstrates the cancelation (by timing out) of the asynchronous call. Because the delay time is within a random range, you might need to refresh the page a couple of times to get the timeout error message.
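The way a cancellation token turns a slow call into a timeout can be sketched in isolation. The example below is not the sample's implementation and does not involve the ASP.NET pipeline: it assumes a hypothetical SlowCallAsync method that uses Task.Delay, and it creates its own CancellationTokenSource with a timeout, where the page would receive its token from ASP.NET based on AsyncTimeout.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

public static class TimeoutDemo
{
    // Hypothetical slow operation that honors a cancellation token.
    private static async Task<string> SlowCallAsync(int latencyMs, CancellationToken token)
    {
        await Task.Delay(latencyMs, token); // throws if the token is canceled first
        return "done";
    }

    // Returns the result, or "timed out" if the call takes longer than timeoutMs.
    public static async Task<string> CallWithTimeoutAsync(int latencyMs, int timeoutMs)
    {
        // The token source cancels itself after timeoutMs milliseconds.
        using (var cts = new CancellationTokenSource(timeoutMs))
        {
            try
            {
                return await SlowCallAsync(latencyMs, cts.Token);
            }
            catch (OperationCanceledException)
            {
                return "timed out";
            }
        }
    }
}
```

For example, `TimeoutDemo.CallWithTimeoutAsync(2000, 100)` completes with "timed out", while `TimeoutDemo.CallWithTimeoutAsync(50, 2000)` completes with "done". In the page shown above, the timeout instead surfaces as a TimeoutException that Page_Error handles.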

Server Configuration for High Concurrency/High Latency Web Service Calls

To realize the benefits of an asynchronous web application, you might need to make some changes to the default server configuration. Keep the following in mind when configuring and stress testing your asynchronous web application.

- Windows 7, Windows Vista, Windows 8, and all Windows client operating systems have a maximum of 10 concurrent requests. You’ll need a Windows Server operating system to see the benefits of asynchronous methods under high load.

- Register .NET 4.5 with IIS from an elevated command prompt using the following command:

  %windir%\Microsoft.NET\Framework64\v4.0.30319\aspnet_regiis -i

  See ASP.NET IIS Registration Tool (Aspnet_regiis.exe).

- You might need to increase the HTTP.sys queue limit from the default value of 1,000 to 5,000. If the setting is too low, you may see HTTP.sys reject requests with an HTTP 503 status. To change the HTTP.sys queue limit:

  - Open IIS Manager and navigate to the Application Pools pane.

  - Right-click the target application pool and select Advanced Settings.

  - In the Advanced Settings dialog box, change Queue Length from 1,000 to 5,000.

- If your application is using web services or System.Net to communicate with a backend over HTTP, you may need to increase the connectionManagement/maxconnection element. For ASP.NET applications, this is limited by the autoConfig feature to 12 times the number of CPUs. That means that on a quad-processor machine, you can have at most 12 * 4 = 48 concurrent connections to an IP end point. Because this is tied to autoConfig, the easiest way to increase maxconnection in an ASP.NET application is to set System.Net.ServicePointManager.DefaultConnectionLimit programmatically in the Application_Start method in the global.asax file. See the sample download for an example.

- In .NET 4.5, the default of 5000 for MaxConcurrentRequestsPerCPU should be fine.
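The DefaultConnectionLimit setting mentioned above can be sketched as a small helper that Application_Start could call. This is an illustration, not the code from the sample download: the ConnectionLimitConfig class is hypothetical, and the value passed to it is something you would choose from your own load testing.

```csharp
using System;
using System.Net;

// Sketch of a helper that could be called from Application_Start in global.asax.
// The class name is hypothetical; only ServicePointManager.DefaultConnectionLimit
// is the real framework property referred to in the text.
public static class ConnectionLimitConfig
{
    public static void Apply(int maxConnectionsPerEndpoint)
    {
        if (maxConnectionsPerEndpoint <= 0)
        {
            throw new ArgumentOutOfRangeException("maxConnectionsPerEndpoint");
        }

        // Affects ServicePoint objects created after this assignment.
        ServicePointManager.DefaultConnectionLimit = maxConnectionsPerEndpoint;
    }
}
```

A call such as `ConnectionLimitConfig.Apply(100)` early in application start-up would raise the per-endpoint limit above the autoConfig default. The value 100 here is arbitrary and is not a recommendation.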